Machine Learning & Product: how to innovate

💡 JC’s Newsletter #143 - 2022-11-01

👉 Instagram, TikTok, and the Three Trends (Stratechery)

❓Why am I sharing this article?

I really believe that we are at a stage where we are going to generate more and more content with AI: text, images, videos, and product design.

I also wonder how the shift in usage toward autoplay could affect our UI. What would autoplay mean for us?

If there is one axiom that governs the consumer Internet — consumer anything, really — it is that convenience matters more than anything.

The first trend is the shift towards ever more immersive mediums. Facebook, for example, started with text but exploded with the addition of photos. Instagram started with photos and expanded into video.

Gaming was the first to make this progression and is well into the 3D era.

The next step is full immersion (virtual reality), and while the format has yet to penetrate the mainstream, this progression in mediums is perhaps the most obvious reason to be bullish about the possibility.

Medium: Text ➡️ Images ➡️ Videos ➡️ 3D ➡️ VR

The second trend is the increase in artificial intelligence.

Over time, the feed’s ranking algorithm has evolved into a machine-learning-driven model that constantly iterates based on every click and linger, but only over the limited set of content constrained by who you follow.

Recommendations are the step beyond ranking: now the pool is not who you follow but all of the content on the entire network; it is a computation challenge that is many orders of magnitude beyond mere ranking (and AI-created content is another massive step-change beyond that).

AI: time ➡️ rank ➡️ recommend ➡️ generate
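To make the difference between the first three steps concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any of these companies actually builds its feed: the `Post` fields and the `score` value (standing in for a model’s predicted engagement) are hypothetical. The point is that "time" and "rank" draw from the same pool (accounts you follow) and differ only in ordering, while "recommend" widens the pool to every post on the network.

```python
from dataclasses import dataclass


@dataclass
class Post:
    author: str
    timestamp: int  # hypothetical: seconds since epoch
    score: float    # hypothetical: a model's predicted engagement


def chronological_feed(posts, following, k):
    """'time': posts from followed accounts only, newest first."""
    pool = [p for p in posts if p.author in following]
    return sorted(pool, key=lambda p: p.timestamp, reverse=True)[:k]


def ranked_feed(posts, following, k):
    """'rank': same pool (followed accounts), ordered by model score."""
    pool = [p for p in posts if p.author in following]
    return sorted(pool, key=lambda p: p.score, reverse=True)[:k]


def recommended_feed(posts, k):
    """'recommend': the pool is every post on the network."""
    return sorted(posts, key=lambda p: p.score, reverse=True)[:k]


if __name__ == "__main__":
    posts = [
        Post("alice", timestamp=300, score=0.2),
        Post("bob", timestamp=100, score=0.9),
        Post("stranger", timestamp=200, score=0.95),
    ]
    following = {"alice", "bob"}
    print([p.author for p in chronological_feed(posts, following, 2)])  # ['alice', 'bob']
    print([p.author for p in ranked_feed(posts, following, 2)])         # ['bob', 'alice']
    print([p.author for p in recommended_feed(posts, 2)])               # ['stranger', 'bob']
```

In a real system the recommendation pool is the entire network, which is why the article calls it a computation challenge many orders of magnitude beyond ranking; this toy version just sorts a list.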

Imagine environments that are not drawn by artists but rather created by AI: this not only increases the possibilities but, crucially, decreases the costs.

The third trend is the change in interaction models from user-directed to computer-controlled.

UI: click ➡️ scroll ➡️ tap ➡️ swipe ➡️ autoplay

One of the reasons Instagram got itself in trouble over the last few months is that it introduced changes along all of these vectors at the same time.

The company introduced more videos into the feed (Trend 1), increased the percentage of recommended posts (Trend 2), and rolled out a new version of the app that was effectively a re-skinned TikTok to a limited set of users (Trend 3). It stands to reason that the company would have been better off doing one at a time.

It seems likely that all of these trends are inextricably intertwined.

👉 An Interview With Daniel Gross and Nat Friedman About the Democratization of AI (Stratechery)

❓Why am I sharing this article?

I found this description of the DRI (Directly Responsible Individual) concept a lot more interesting than previous ones. I love the fact that you are only the DRI of a topic for a limited time.

Also: how we are only at the beginning of what AI can do, and how training certain models costs less than expected.

DRI:

Apple has a very unique way of building an org where there’s the org structure and then there’s this thing called the DRI model, which is a virtual org built on top of it with no guaranteed annuity. Every year, there are different DRIs for different big things at Apple.

It was Steve [Jobs’] systematic way of ensuring both accountability and the ability to highlight and have people be responsible for projects in the organization regardless of where they were.

A DRI basically means you’re responsible for doing these things, usually called a tent-pole, a big thing for us this year, and you’re not guaranteed it next year. You’re not stuck in this tenured problem that you traditionally have with org charts where you promote someone and you basically can’t really demote people.

The stage of AI:

I had this experience of, with a great team, taking what essentially from OpenAI was a research artifact and figuring out how to turn it into a product and it was so successful. People love using Copilot.

The situation that we’re in now is the researchers have just raced ahead and they’ve delivered this bounty of new capabilities to the world in an accelerating way, they’re doing it every day. So we now have this capability overhang that’s just hanging out over the world and, bizarrely, entrepreneurs and product people have only just begun to digest these new capabilities and to ask the question, “What’s the product you can now build that you couldn’t build before that people really want to use?”

Six months to a year later, they successfully trained this Stable Diffusion model. I think, actually, the total cost, Emad has talked about this publicly of training that model, was in the low millions of dollars. When you train these models, you make mistakes, you have bad training runs, things won’t converge, you have to start over, and it includes all the errors.

👉 A Decade of Deep Learning: How the AI Startup Experience Has Evolved (Future)

❓Why am I sharing this article?

I think we should push our internal team to become ML stars; we have the full capacity to do so.

I love the fact that we are also stepping up on the topic.

And then if you are a practitioner and you want to get into it, there are a ton of really exciting new online classes, videos, and platforms. There’s so much material out there now. Even the Stanford CS224 NLP lessons are out there, so you can go quite deep if you want to. That’s what I’d encourage folks to do.

Once you’ve done that, the next level is to just get your hands dirty and program something, and play around with these models. Think about what kinds of processes and tasks people are currently doing manually, or sometimes maybe mechanically, but still requiring a human to oversee. Could you automate those and build something unique?

👉 How AI Copywriters Are Changing SEO (Future)

❓Why am I sharing this article?

How would you change our SEO approach based on the power of Machine Learning and AI? How could we cut the cost of producing an article by a factor of 10?

What do you think?

The advent of cheap, easy-to-use AI copywriting tools enabled by GPT-3 has changed the game entirely. We’re just now beginning to see the effects of companies using AI to produce written content en masse.

AI tools like Jasper and CopyAI

AI tools can be used to generate content that is indistinguishable from human output. But while AI-generated content is typically grammatically correct, AI copywriters are not always factually accurate.

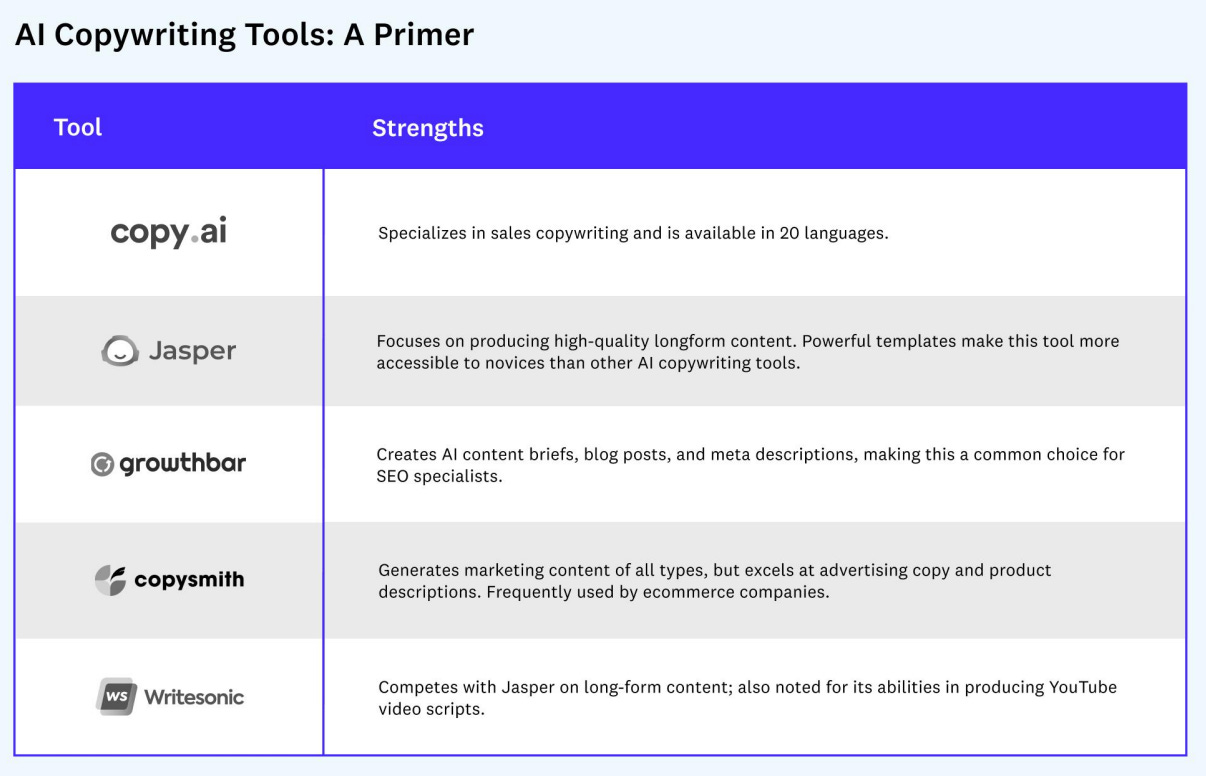

Many different types of AI copywriting tools are available, including Copy.ai, Jasper, GrowthBar, Copysmith, and Writesonic, among others.

AI copywriters are not a replacement for human writers — they are best used as an assistant to improve on human efforts. This means that while they can help with some aspects of writing, they should not be solely relied on to produce content in their current state.

👉 Bill Gates (1995): The Internet Tidal Wave (Memos)

❓Why am I sharing this article?

When I see what is happening all around us, I think the same is true for Machine Learning.

How should it affect our strategy? How do we build?

The next few years are going to be very exciting as we tackle these challenges and opportunities. The Internet is a tidal wave. It changes the rules. It is an incredible opportunity as well as an incredible challenge. I am looking forward to your input on how we can improve our strategy to continue our track record of incredible success.

👉 Crafting Prompts for Text-to-Image Models (Towards Data Science)

❓Why am I sharing this article?

These new text-to-image models are a life-changing way to unleash creativity.

Effective prompts often include application names like Octane Render or Unreal Engine and artist names like Craig Mullins. You can find a more detailed prompt analysis in this notebook.

Our model is freely available on HuggingFace at succinctly/text2image-prompt-generator. Feel free to interact with the demo!
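The prompt structure described above (a subject followed by modifiers naming render engines and artists) can be sketched as a small Python helper. This is an assumption-laden illustration: `build_prompt` is a hypothetical name, the comma-separated layout and the "by <artist>" phrasing are common prompt conventions rather than a fixed syntax, and it does not call the succinctly/text2image-prompt-generator model.

```python
def build_prompt(subject, styles=(), artists=()):
    """Join a subject with style and artist modifiers into one prompt string.

    Hypothetical helper: the comma-separated format and the 'by <artist>'
    phrasing are assumed conventions, not a required syntax.
    """
    parts = [subject.strip()]
    parts += [s.strip() for s in styles]
    parts += [f"by {a.strip()}" for a in artists]
    # Drop empty fragments and exact duplicates while keeping the original order.
    seen = set()
    unique = []
    for part in parts:
        if part and part not in seen:
            seen.add(part)
            unique.append(part)
    return ", ".join(unique)


if __name__ == "__main__":
    print(build_prompt(
        "a lighthouse on a cliff at sunset",
        styles=["Octane Render", "Unreal Engine"],
        artists=["Craig Mullins"],
    ))
    # a lighthouse on a cliff at sunset, Octane Render, Unreal Engine, by Craig Mullins
```

Keeping the modifiers as separate lists makes it easy to try different style and artist combinations against the same subject and compare the generated images.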

👉 Hybrid intelligence in healthcare (Scale-free)

❓Why am I sharing this article?

An interesting take on how to make ML work in healthcare.

In medicine, it is often assumed that human+AI centaurs are more accurate than either on their own. A typical research study goes something like this:

1) code up a deep learning model to classify pathology on medical imaging,

2) demonstrate that the algorithm performs on par with human physicians,

3) show that the patterns of errors made by humans and AI are non-overlapping, and therefore

4) conclude that combining human and AI predictions results in the best performance.
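The naive reasoning in steps 3 and 4 can be sketched in Python. This is a toy illustration, not data from any study: the labels and probabilities are invented so that human and AI errors do not overlap, and the fusion rule (a weighted average of probabilities, with a hypothetical `ai_weight` parameter) is one simple assumed choice. Under those conditions, combining wins by construction, which is exactly the assumption the article goes on to question.

```python
def accuracy(labels, probs, threshold=0.5):
    """Fraction of cases where the thresholded probability matches the label."""
    hits = sum(int(p >= threshold) == y for y, p in zip(labels, probs))
    return hits / len(labels)


def combine(human_probs, ai_probs, ai_weight=0.5):
    """Weighted average of human and AI probabilities (an assumed fusion rule)."""
    return [ai_weight * a + (1 - ai_weight) * h for h, a in zip(human_probs, ai_probs)]


if __name__ == "__main__":
    # Toy data, invented for illustration: 1 = pathology present, 0 = absent.
    labels = [1, 0, 1, 0]
    human = [0.90, 0.20, 0.45, 0.55]  # human is wrong on the last two cases
    ai = [0.40, 0.60, 0.80, 0.10]     # AI is wrong on the first two cases
    print(accuracy(labels, human))                 # 0.5
    print(accuracy(labels, ai))                    # 0.5
    print(accuracy(labels, combine(human, ai)))    # 1.0
```

The sketch treats the human and the AI as independent voters. In a real clinical workflow the physician sees the AI’s output before deciding, so an incorrect AI prediction can pull the human toward the same error, and the errors stop being non-overlapping.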

Perhaps unsurprisingly, it turns out the story is more nuanced in medicine. How do we optimally integrate AI into a clinician’s workflow to minimize harm and improve patient care? What is the most effective process for human-AI collaboration? Such questions are understudied, but the details of interfacing AI and humans in medicine are critical.

Andrew Ng's group at Stanford published similar findings in pathology; a deep-learning algorithm designed to assist pathologists in the diagnosis of liver cancer negatively impacted performance when the AI prediction was incorrect.

In summary, trust between physicians and AI was a key factor in driving adoption. Trust was established through experience; like in chess, physicians developed mental models for the strength and weaknesses of the AI by observing the system in a range of clinical scenarios.

It’s already over! Please share JC’s Newsletter with your friends, and subscribe 👇

Let’s talk about this together on LinkedIn or on Twitter. Have a good week!